Assisting the Adversary to Improve GAN Training

Andreas Munk, William Harvey & Frank Wood

IJCNN 2021

amunk@cs.ubc.ca

March 26, 2021

Table of Contents

- Closing the gap between theory and practice

- Generative adversarial networks (GANs) goodfellow2014generative

- Why GANs?

- The optimal adversary assumption and the minimization of divergences

- Does an optimal adversary lead to optimal gradients?

- Adversary constructors

- Assisting the Adversary - introducing AdvAs

- Removing the hyperparameter \(\lambda\)

- Experiments

- MNIST and WGAN-GP gulrajani2017improved

- CelebA and StyleGAN karras2020analyzing

- References

\( \newcommand{\ie}{i.e.} \newcommand{\eg}{e.g.} \newcommand{\etal}{\textit{et~al.}} \newcommand{\wrt}{w.r.t.} \newcommand{\bra}[1]{\langle #1 \mid} \newcommand{\ket}[1]{\mid #1\rangle} \newcommand{\braket}[2]{\langle #1 \mid #2 \rangle} \newcommand{\bigbra}[1]{\big\langle #1 \big\mid} \newcommand{\bigket}[1]{\big\mid #1 \big\rangle} \newcommand{\bigbraket}[2]{\big\langle #1 \big\mid #2 \big\rangle} \newcommand{\grad}{\boldsymbol{\nabla}} \newcommand{\divop}{\grad\scap} \newcommand{\pp}{\partial} \newcommand{\ppsqr}{\partial^2} \renewcommand{\vec}[1]{\boldsymbol{#1}} \newcommand{\trans}[1]{#1^\mr{T}} \newcommand{\dm}{\,\mathrm{d}} \newcommand{\complex}{\mathbb{C}} \newcommand{\real}{\mathbb{R}} \newcommand{\krondel}[1]{\delta_{#1}} \newcommand{\limit}[2]{\mathop{\longrightarrow}_{#1 \rightarrow #2}} \newcommand{\measure}{\mathbb{P}} \newcommand{\scap}{\!\cdot\!} \newcommand{\intd}[1]{\int\!\dm#1\: } \newcommand{\ave}[1]{\left\langle #1 \right\rangle} \newcommand{\br}[1]{\left\lbrack #1 \right\rbrack} \newcommand{\paren}[1]{\left(#1\right)} \newcommand{\tub}[1]{\left\{#1\right\}} \newcommand{\mr}[1]{\mathrm{#1}} \newcommand{\evalat}[1]{\left.#1\right\vert} \newcommand*{\given}{\mid} \newcommand{\abs}[1]{\left\lvert#1\right\rvert} \newcommand{\norm}[1]{\left\lVert#1\right\rVert} \newcommand{\figleft}{\em (Left)} \newcommand{\figcenter}{\em (Center)} \newcommand{\figright}{\em (Right)} \newcommand{\figtop}{\em (Top)} \newcommand{\figbottom}{\em (Bottom)} \newcommand{\captiona}{\em (a)} \newcommand{\captionb}{\em (b)} \newcommand{\captionc}{\em (c)} \newcommand{\captiond}{\em (d)} \newcommand{\newterm}[1]{\bf #1} \def\ceil#1{\lceil #1 \rceil} \def\floor#1{\lfloor #1 \rfloor} \def\1{\boldsymbol{1}} \newcommand{\train}{\mathcal{D}} \newcommand{\valid}{\mathcal{D_{\mathrm{valid}}}} \newcommand{\test}{\mathcal{D_{\mathrm{test}}}} \def\eps{\epsilon} \def\reta{\textnormal{$\eta$}} \def\ra{\textnormal{a}} \def\rb{\textnormal{b}} \def\rc{\textnormal{c}} \def\rd{\textnormal{d}} 
\def\re{\textnormal{e}} \def\rf{\textnormal{f}} \def\rg{\textnormal{g}} \def\rh{\textnormal{h}} \def\ri{\textnormal{i}} \def\rj{\textnormal{j}} \def\rk{\textnormal{k}} \def\rl{\textnormal{l}} \def\rn{\textnormal{n}} \def\ro{\textnormal{o}} \def\rp{\textnormal{p}} \def\rq{\textnormal{q}} \def\rr{\textnormal{r}} \def\rs{\textnormal{s}} \def\rt{\textnormal{t}} \def\ru{\textnormal{u}} \def\rv{\textnormal{v}} \def\rw{\textnormal{w}} \def\rx{\textnormal{x}} \def\ry{\textnormal{y}} \def\rz{\textnormal{z}} \def\rvepsilon{\mathbf{\epsilon}} \def\rvtheta{\mathbf{\theta}} \def\rva{\mathbf{a}} \def\rvb{\mathbf{b}} \def\rvc{\mathbf{c}} \def\rvd{\mathbf{d}} \def\rve{\mathbf{e}} \def\rvf{\mathbf{f}} \def\rvg{\mathbf{g}} \def\rvh{\mathbf{h}} \def\rvu{\mathbf{i}} \def\rvj{\mathbf{j}} \def\rvk{\mathbf{k}} \def\rvl{\mathbf{l}} \def\rvm{\mathbf{m}} \def\rvn{\mathbf{n}} \def\rvo{\mathbf{o}} \def\rvp{\mathbf{p}} \def\rvq{\mathbf{q}} \def\rvr{\mathbf{r}} \def\rvs{\mathbf{s}} \def\rvt{\mathbf{t}} \def\rvu{\mathbf{u}} \def\rvv{\mathbf{v}} \def\rvw{\mathbf{w}} \def\rvx{\mathbf{x}} \def\rvy{\mathbf{y}} \def\rvz{\mathbf{z}} \def\erva{\textnormal{a}} \def\ervb{\textnormal{b}} \def\ervc{\textnormal{c}} \def\ervd{\textnormal{d}} \def\erve{\textnormal{e}} \def\ervf{\textnormal{f}} \def\ervg{\textnormal{g}} \def\ervh{\textnormal{h}} \def\ervi{\textnormal{i}} \def\ervj{\textnormal{j}} \def\ervk{\textnormal{k}} \def\ervl{\textnormal{l}} \def\ervm{\textnormal{m}} \def\ervn{\textnormal{n}} \def\ervo{\textnormal{o}} \def\ervp{\textnormal{p}} \def\ervq{\textnormal{q}} \def\ervr{\textnormal{r}} \def\ervs{\textnormal{s}} \def\ervt{\textnormal{t}} \def\ervu{\textnormal{u}} \def\ervv{\textnormal{v}} \def\ervw{\textnormal{w}} \def\ervx{\textnormal{x}} \def\ervy{\textnormal{y}} \def\ervz{\textnormal{z}} \def\rmA{\mathbf{A}} \def\rmB{\mathbf{B}} \def\rmC{\mathbf{C}} \def\rmD{\mathbf{D}} \def\rmE{\mathbf{E}} \def\rmF{\mathbf{F}} \def\rmG{\mathbf{G}} \def\rmH{\mathbf{H}} \def\rmI{\mathbf{I}} \def\rmJ{\mathbf{J}} 
\def\rmK{\mathbf{K}} \def\rmL{\mathbf{L}} \def\rmM{\mathbf{M}} \def\rmN{\mathbf{N}} \def\rmO{\mathbf{O}} \def\rmP{\mathbf{P}} \def\rmQ{\mathbf{Q}} \def\rmR{\mathbf{R}} \def\rmS{\mathbf{S}} \def\rmT{\mathbf{T}} \def\rmU{\mathbf{U}} \def\rmV{\mathbf{V}} \def\rmW{\mathbf{W}} \def\rmX{\mathbf{X}} \def\rmY{\mathbf{Y}} \def\rmZ{\mathbf{Z}} \def\ermA{\textnormal{A}} \def\ermB{\textnormal{B}} \def\ermC{\textnormal{C}} \def\ermD{\textnormal{D}} \def\ermE{\textnormal{E}} \def\ermF{\textnormal{F}} \def\ermG{\textnormal{G}} \def\ermH{\textnormal{H}} \def\ermI{\textnormal{I}} \def\ermJ{\textnormal{J}} \def\ermK{\textnormal{K}} \def\ermL{\textnormal{L}} \def\ermM{\textnormal{M}} \def\ermN{\textnormal{N}} \def\ermO{\textnormal{O}} \def\ermP{\textnormal{P}} \def\ermQ{\textnormal{Q}} \def\ermR{\textnormal{R}} \def\ermS{\textnormal{S}} \def\ermT{\textnormal{T}} \def\ermU{\textnormal{U}} \def\ermV{\textnormal{V}} \def\ermW{\textnormal{W}} \def\ermX{\textnormal{X}} \def\ermY{\textnormal{Y}} \def\ermZ{\textnormal{Z}} \def\vzero{\boldsymbol{0}} \def\vone{\boldsymbol{1}} \def\vmu{\boldsymbol{\mu}} \def\vtheta{\boldsymbol{\theta}} \def\va{\boldsymbol{a}} \def\vb{\boldsymbol{b}} \def\vc{\boldsymbol{c}} \def\vd{\boldsymbol{d}} \def\ve{\boldsymbol{e}} \def\vf{\boldsymbol{f}} \def\vg{\boldsymbol{g}} \def\vh{\boldsymbol{h}} \def\vi{\boldsymbol{i}} \def\vj{\boldsymbol{j}} \def\vk{\boldsymbol{k}} \def\vl{\boldsymbol{l}} \def\vm{\boldsymbol{m}} \def\vn{\boldsymbol{n}} \def\vo{\boldsymbol{o}} \def\vp{\boldsymbol{p}} \def\vq{\boldsymbol{q}} \def\vr{\boldsymbol{r}} \def\vs{\boldsymbol{s}} \def\vt{\boldsymbol{t}} \def\vu{\boldsymbol{u}} \def\vv{\boldsymbol{v}} \def\vw{\boldsymbol{w}} \def\vx{\boldsymbol{x}} \def\vy{\boldsymbol{y}} \def\vz{\boldsymbol{z}} \def\evalpha{\alpha} \def\evbeta{\beta} \def\evepsilon{\epsilon} \def\evlambda{\lambda} \def\evomega{\omega} \def\evmu{\mu} \def\evpsi{\psi} \def\evsigma{\sigma} \def\evtheta{\theta} \def\eva{a} \def\evb{b} \def\evc{c} \def\evd{d} \def\eve{e} 
\def\evf{f} \def\evg{g} \def\evh{h} \def\evi{i} \def\evj{j} \def\evk{k} \def\evl{l} \def\evm{m} \def\evn{n} \def\evo{o} \def\evp{p} \def\evq{q} \def\evr{r} \def\evs{s} \def\evt{t} \def\evu{u} \def\evv{v} \def\evw{w} \def\evx{x} \def\evy{y} \def\evz{z} \def\mA{\boldsymbol{A}} \def\mB{\boldsymbol{B}} \def\mC{\boldsymbol{C}} \def\mD{\boldsymbol{D}} \def\mE{\boldsymbol{E}} \def\mF{\boldsymbol{F}} \def\mG{\boldsymbol{G}} \def\mH{\boldsymbol{H}} \def\mI{\boldsymbol{I}} \def\mJ{\boldsymbol{J}} \def\mK{\boldsymbol{K}} \def\mL{\boldsymbol{L}} \def\mM{\boldsymbol{M}} \def\mN{\boldsymbol{N}} \def\mO{\boldsymbol{O}} \def\mP{\boldsymbol{P}} \def\mQ{\boldsymbol{Q}} \def\mR{\boldsymbol{R}} \def\mS{\boldsymbol{S}} \def\mT{\boldsymbol{T}} \def\mU{\boldsymbol{U}} \def\mV{\boldsymbol{V}} \def\mW{\boldsymbol{W}} \def\mX{\boldsymbol{X}} \def\mY{\boldsymbol{Y}} \def\mZ{\boldsymbol{Z}} \def\mBeta{\boldsymbol{\beta}} \def\mPhi{\boldsymbol{\Phi}} \def\mLambda{\boldsymbol{\Lambda}} \def\mSigma{\boldsymbol{\Sigma}} \def\gA{\mathcal{A}} \def\gB{\mathcal{B}} \def\gC{\mathcal{C}} \def\gD{\mathcal{D}} \def\gE{\mathcal{E}} \def\gF{\mathcal{F}} \def\gG{\mathcal{G}} \def\gH{\mathcal{H}} \def\gI{\mathcal{I}} \def\gJ{\mathcal{J}} \def\gK{\mathcal{K}} \def\gL{\mathcal{L}} \def\gM{\mathcal{M}} \def\gN{\mathcal{N}} \def\gO{\mathcal{O}} \def\gP{\mathcal{P}} \def\gQ{\mathcal{Q}} \def\gR{\mathcal{R}} \def\gS{\mathcal{S}} \def\gT{\mathcal{T}} \def\gU{\mathcal{U}} \def\gV{\mathcal{V}} \def\gW{\mathcal{W}} \def\gX{\mathcal{X}} \def\gY{\mathcal{Y}} \def\gZ{\mathcal{Z}} \def\sA{\mathbb{A}} \def\sB{\mathbb{B}} \def\sC{\mathbb{C}} \def\sD{\mathbb{D}} \def\sF{\mathbb{F}} \def\sG{\mathbb{G}} \def\sH{\mathbb{H}} \def\sI{\mathbb{I}} \def\sJ{\mathbb{J}} \def\sK{\mathbb{K}} \def\sL{\mathbb{L}} \def\sM{\mathbb{M}} \def\sN{\mathbb{N}} \def\sO{\mathbb{O}} \def\sP{\mathbb{P}} \def\sQ{\mathbb{Q}} \def\sR{\mathbb{R}} \def\sS{\mathbb{S}} \def\sT{\mathbb{T}} \def\sU{\mathbb{U}} \def\sV{\mathbb{V}} \def\sW{\mathbb{W}} 
\def\sX{\mathbb{X}} \def\sY{\mathbb{Y}} \def\sZ{\mathbb{Z}} \def\emLambda{\Lambda} \def\emA{A} \def\emB{B} \def\emC{C} \def\emD{D} \def\emE{E} \def\emF{F} \def\emG{G} \def\emH{H} \def\emI{I} \def\emJ{J} \def\emK{K} \def\emL{L} \def\emM{M} \def\emN{N} \def\emO{O} \def\emP{P} \def\emQ{Q} \def\emR{R} \def\emS{S} \def\emT{T} \def\emU{U} \def\emV{V} \def\emW{W} \def\emX{X} \def\emY{Y} \def\emZ{Z} \def\emSigma{\Sigma} \newcommand{\etens}[1]{\mathsfit{#1}} \def\etLambda{\etens{\Lambda}} \def\etA{\etens{A}} \def\etB{\etens{B}} \def\etC{\etens{C}} \def\etD{\etens{D}} \def\etE{\etens{E}} \def\etF{\etens{F}} \def\etG{\etens{G}} \def\etH{\etens{H}} \def\etI{\etens{I}} \def\etJ{\etens{J}} \def\etK{\etens{K}} \def\etL{\etens{L}} \def\etM{\etens{M}} \def\etN{\etens{N}} \def\etO{\etens{O}} \def\etP{\etens{P}} \def\etQ{\etens{Q}} \def\etR{\etens{R}} \def\etS{\etens{S}} \def\etT{\etens{T}} \def\etU{\etens{U}} \def\etV{\etens{V}} \def\etW{\etens{W}} \def\etX{\etens{X}} \def\etY{\etens{Y}} \def\etZ{\etens{Z}} \newcommand{\pdata}{p_{\rm{data}}} \newcommand{\ptrain}{\hat{p}_{\rm{data}}} \newcommand{\Ptrain}{\hat{P}_{\rm{data}}} \newcommand{\pmodel}{p_{\rm{model}}} \newcommand{\Pmodel}{P_{\rm{model}}} \newcommand{\ptildemodel}{\tilde{p}_{\rm{model}}} \newcommand{\pencode}{p_{\rm{encoder}}} \newcommand{\pdecode}{p_{\rm{decoder}}} \newcommand{\precons}{p_{\rm{reconstruct}}} \newcommand{\laplace}{\mathrm{Laplace}} % Laplace distribution \newcommand{\E}{\mathbb{E}} \newcommand{\Ls}{\mathcal{L}} \newcommand{\R}{\mathbb{R}} \newcommand{\emp}{\tilde{p}} \newcommand{\lr}{\alpha} \newcommand{\reg}{\lambda} \newcommand{\rect}{\mathrm{rectifier}} \newcommand{\softmax}{\mathrm{softmax}} \newcommand{\sigmoid}{\sigma} \newcommand{\softplus}{\zeta} \newcommand{\KL}{D_{\mathrm{KL}}} \newcommand{\Var}{\mathrm{Var}} \newcommand{\standarderror}{\mathrm{SE}} \newcommand{\Cov}{\mathrm{Cov}} \newcommand{\normlzero}{L^0} \newcommand{\normlone}{L^1} \newcommand{\normltwo}{L^2} \newcommand{\normlp}{L^p} 
\newcommand{\normmax}{L^\infty} \newcommand{\parents}{Pa} % See usage in notation.tex. Chosen to match Daphne's book. \DeclareMathOperator*{\argmax}{arg\,max} \DeclareMathOperator*{\argmin}{arg\,min} \DeclareMathOperator{\sign}{sign} \DeclareMathOperator{\Tr}{Tr} \let\ab\allowbreak \newcommand{\vxlat}{\vx_{\mr{lat}}} \newcommand{\vxobs}{\vx_{\mr{obs}}} \newcommand{\block}[1]{\underbrace{\begin{matrix}1 & \cdots & 1\end{matrix}}_{#1}} \newcommand{\blockt}[1]{\begin{rcases} \begin{matrix} ~\\ ~\\ ~ \end{matrix} \end{rcases}{#1}} \newcommand{\tikzmark}[1]{\tikz[overlay,remember picture] \node (#1) {};} \)

Closing the gap between theory and practice

- The GAN framework is a minimax game that pits an adversary (critic/discriminator) against a generator

- The adversary is often assumed optimal in theoretical analysis and in the construction of different GANs

- In practice this is essentially never true

- We consider a largely overlooked approach to constraining GAN training mescheder2017numerics,nagarajan2017gradient

- Re-motivated to bridge the gap between theory and practice

- Accounts for the adversary being suboptimal: the Adversary's Assistant (AdvAs) munk2020assisting

- A regularizer which encourages the generator to move towards points where the current adversary is optimal

Generative adversarial networks (GANs) goodfellow2014generative

- A generator is a neural network \(g:\gZ\rightarrow\gX\subseteq\real^{D_{x}}\) which maps from a random vector \(\vz\in\gZ\) to an output \(\vx \in \gX\)

- \(g\) characterizes a distribution \(p_{\theta}(\vx)\), where \(\theta\in\Theta\subseteq\mathbb{R}^{D_g}\) denotes the generator’s parameters

- Aim is to match \(p_{\theta}\) with some real target distribution \(p_{\mr{true}}\)

- An adversary \(a_\phi:\gX\rightarrow \gA\) with parameters \(\phi\in\Phi\subseteq\real^{D_a}\)

Generally we frame GANs as a minimax game,

\[\min_{\theta}\max_{\phi}h(p_{\theta},a_\phi)\]

- If the minimax game is perfectly solved, then \(p_{\theta} = p_{\mr{true}}\)

Originally, Goodfellow et al. (2014) defined \(\gA=\br{0,1}\) and

\[ h(p_{\theta},a_\phi) = \mathbb{E}_{x\sim p_{\mr{true}}} \left[ \log a_\phi(x) \right] + \mathbb{E}_{x\sim p_{\theta}} \left[ \log (1- a_\phi(x)) \right].\]
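
In practice both expectations are estimated from samples. A minimal sketch of such a Monte Carlo estimate follows; the function name `gan_objective`, the two toy adversaries and the sample values are illustrative assumptions, not taken from the original work.

```python
import math

def gan_objective(adversary, real_samples, fake_samples):
    """Monte Carlo estimate of h(p_theta, a_phi) for the original GAN objective.

    `adversary` maps a sample x to a value in (0, 1). The two lists hold samples
    from p_true and p_theta respectively. All names here are illustrative.
    """
    real_term = sum(math.log(adversary(x)) for x in real_samples) / len(real_samples)
    fake_term = sum(math.log(1.0 - adversary(x)) for x in fake_samples) / len(fake_samples)
    return real_term + fake_term

real = [0.1, 0.7, 1.3]   # stand-in samples from p_true
fake = [-0.4, 0.2]       # stand-in samples from p_theta

# An adversary that outputs 0.5 everywhere gives h = log(0.5) + log(0.5) = -log 4.
uninformed = lambda x: 0.5
h_uninformed = gan_objective(uninformed, real, fake)

# A hypothetical logistic adversary that scores larger x as more likely real.
logistic = lambda x: 1.0 / (1.0 + math.exp(-x))
h_logistic = gan_objective(logistic, real, fake)
```

On these toy samples the logistic adversary attains a larger value of \(h\) than the uninformed one, which is the direction the inner maximization over \(\phi\) pushes in.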

Why GANs?

- Generative modeling using implicit densities

- Produce photo-realistic images zhou2019hype

- However, they tend to have unstable training dynamics mescheder2018which

- Primarily addressed using different GAN objectives or by using various regularization techniques when training the adversary

- We focus on regularizing the training of the generator

- Compatible with above-mentioned regularization techniques

The optimal adversary assumption and the minimization of divergences

- Before each generator update, the adversary is assumed optimal

- That is, if \(\gF\) is the class of permissible adversary functions, then for a particular \(\theta\) the adversary is optimal if \(a^{*}=\argmax_{a \in \gF}h(p_{\theta},a)\)

When \(a\) is parameterized by a neural net with parameters \(\phi\in\Phi\), we define

\[\Phi^*(\theta) = \{\phi \in \Phi \mid h(p_\theta, a_\phi) = \max_{a \in \gF}h(p_{\theta},a) \}\]

This assumption turns the two-player minimax game into the minimization of an objective w.r.t. \(\theta\) alone:

\[ \gM(p_{\theta}) = \max_{a \in \gF}h(p_{\theta},a) = h(p_\theta, a_{\phi^*}) \quad \text{where} \quad \phi^*\in\Phi^*(\theta). \]

- For instance, goodfellow2014generative showed that \(\gM(p_{\theta}) = 2\cdot\text{JSD}(p_{\mr{true}} \,\|\, p_\theta) - \log 4\)

- Many other GAN approaches make use of the optimal adversary assumption to show that \(\gM(p_{\theta})\) reduces to some divergence (e.g. WGAN arjovsky2017wasserstein)
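
The Jensen-Shannon identity above can be checked numerically on a small discrete example. The sketch below assumes an unrestricted adversary class, for which the pointwise maximizer of the original GAN objective is \(a^{*}(x) = p_{\mr{true}}(x) / (p_{\mr{true}}(x) + p_{\theta}(x))\); the two three-point distributions are made up for illustration.

```python
import math

def h(p_true, p_theta, a):
    """Original GAN objective for discrete distributions and adversary values a[i]."""
    return sum(p * math.log(ai) + q * math.log(1.0 - ai)
               for p, q, ai in zip(p_true, p_theta, a))

def kl(p, q):
    """KL divergence between discrete distributions (terms with p[i] = 0 vanish)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def jsd(p, q):
    """Jensen-Shannon divergence via the mixture m = (p + q) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

p_true = [0.5, 0.3, 0.2]    # illustrative target distribution
p_theta = [0.2, 0.2, 0.6]   # illustrative generator distribution

# Pointwise maximizer of h when the adversary class is unrestricted.
a_star = [p / (p + q) for p, q in zip(p_true, p_theta)]

m_value = h(p_true, p_theta, a_star)                       # M(p_theta)
divergence_form = 2 * jsd(p_true, p_theta) - math.log(4)   # 2 * JSD - log 4

# Any other adversary, e.g. the constant 0.5, attains a smaller value of h.
h_constant = h(p_true, p_theta, [0.5, 0.5, 0.5])
```

Here `m_value` and `divergence_form` agree up to floating-point error, while the constant adversary only reaches \(-\log 4\).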

Does an optimal adversary lead to optimal gradients?

- The goal of training the generator can be seen as minimizing \(\gM(p_{\theta})\)

- In practice the generator update requires its gradient \(\grad_\theta \gM(p_{\theta})\) (e.g. gradient descent)

- Assume we have generator parameters \(\theta'\). Consider \(\phi^*\in\Phi^*(\theta')\) such that \(h (p_{\theta'}, a_{\phi^*})\) and \(\gM(p_{\theta'})\) are equal in value

- We want \(\grad_\theta \gM(p_{\theta})\mid_{\theta=\theta'}\) but calculate in practice the partial derivative \(D_1 h (p_\theta, a_{\phi^*})\mid_{\theta=\theta'}\)

- Where we defined \(D_1 h (p_\theta, a_\phi)\) to denote the partial derivative of \(h(p_\theta, a_\phi)\) with respect to \(\theta\) with \(\phi\) kept constant. Similarly, define \(D_2 h (p_\theta, a_\phi)\) to denote the derivative of \(h (p_\theta, a_\phi)\) with respect to \(\phi\), with \(\theta\) held constant.

- It is not immediately obvious these two quantities are equal


- By extending Theorem 1 in milgrom2002envelope we have that

Theorem 1. Let \(\gM(p_{\theta})=h(p_{\theta},a_{\phi^*})\) for any \(\phi^*\in\Phi^{*}(\theta)\). Assuming that \(\gM(p_{\theta})\) is differentiable w.r.t. \(\theta\) and \(h(p_{\theta},a_{\phi})\) is differentiable w.r.t. \(\theta\) for all \(\phi\in\Phi^{*}(\theta)\), then if \(\phi^{*}\in\Phi^{*}(\theta)\) we have \[ \grad_{\theta}\gM(p_{\theta})=D_1 h(p_{\theta},a_{\phi^{*}}) \]
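
A toy check of the theorem, under loud assumptions: the scalar function `h(theta, phi)` below is a hypothetical stand-in for \(h(p_{\theta},a_{\phi})\), chosen only because its maximizer over `phi` is known in closed form (`phi* = theta`). Derivatives are approximated with central finite differences.

```python
def h(theta, phi):
    """Hypothetical scalar stand-in for h(p_theta, a_phi); maximized over phi at phi = theta."""
    return theta ** 2 - (phi - theta) ** 2

def M(theta):
    """M(theta) = max_phi h(theta, phi), attained at phi* = theta, so M(theta) = theta^2."""
    return h(theta, theta)

def central_diff(fn, x, eps=1e-5):
    """Central finite-difference approximation of a scalar derivative."""
    return (fn(x + eps) - fn(x - eps)) / (2 * eps)

theta0 = 1.5

# Gradient we want: the total derivative of M at theta0.
grad_M = central_diff(M, theta0)

# Gradient we compute in practice: D_1 h with the adversary held fixed.
phi_star = theta0                                             # optimal adversary at theta0
d1_optimal = central_diff(lambda t: h(t, phi_star), theta0)

phi_subopt = 0.5                                              # a suboptimal adversary
d1_subopt = central_diff(lambda t: h(t, phi_subopt), theta0)
```

With the optimal adversary, `d1_optimal` matches `grad_M` (both are `2 * theta0`). With the suboptimal adversary, `d1_subopt` equals `2 * phi_subopt` and differs from the gradient of \(\gM\), which is the gap between theory and practice that motivates AdvAs.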

Adversary constructors

An adversary constructor is a function \(f:\Theta\rightarrow\Phi\), where for all \(\theta \in \Theta\), \(f(\theta) = \phi^*\in\Phi^{*}(\theta)\),

\[h(p_{\theta},a_{f(\theta)})=\max_{a\in\gF}h(p_{\theta},a)\]

We cannot compute the function \(f(\theta)\) in general. However, adversary constructors lead to a condition which must be satisfied for Theorem 1 to be invoked

Corollary 1. Let \(f:\Theta\rightarrow\Phi\) be a differentiable mapping such that for all \(\theta \in \Theta\), \(\gM(p_{\theta})=h(p_{\theta},a_{f(\theta)})\). If the conditions in Theorem 1 are satisfied and the Jacobian matrix of \(f\) with respect to \(\theta\), \(\mJ_{\theta}(f)\) exists for all \(\theta\in\Theta\) then

\begin{equation}\label{eq:cor} \trans{D_2 h(p_{\theta},a_{f(\theta)})} \mJ_{\theta}(f) = 0. \end{equation}
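
A sketch of why this condition follows, using only the definitions above: differentiating \(\gM(p_{\theta})=h(p_{\theta},a_{f(\theta)})\) with the chain rule gives

\[ \trans{\grad_{\theta}\gM(p_{\theta})} = \trans{D_1 h(p_{\theta},a_{f(\theta)})} + \trans{D_2 h(p_{\theta},a_{f(\theta)})} \mJ_{\theta}(f). \]

Theorem 1 states that \(\grad_{\theta}\gM(p_{\theta}) = D_1 h(p_{\theta},a_{f(\theta)})\), so the first term on the right already accounts for the whole left-hand side and the remaining term \(\trans{D_2 h(p_{\theta},a_{f(\theta)})} \mJ_{\theta}(f)\) must be zero.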

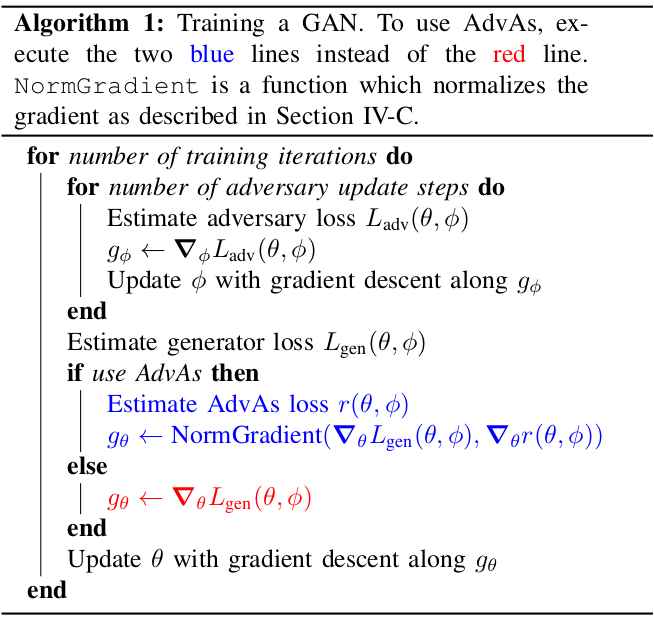

Assisting the Adversary - introducing AdvAs

- Eq. \ref{eq:cor} is a necessary condition for invoking Theorem 1

- \(\trans{D_2 h(p_{\theta},a_{f(\theta)})} \mJ_{\theta}(f)\) could be viewed as a measure of “closeness”.

- Cannot be computed

- Can compute \(D_2 h(p_{\theta},a_{\phi})\)

- Use the magnitude as an approximate measure of how “close” we are to having an optimal adversary

Define the objective functions \(L_{\text{gen}}\) and \(L_{\text{adv}}\) through their gradients

\begin{align} \grad_\theta L_{\text{gen}}(\theta, \phi) &= \grad_\theta h(p_{\theta},a_\phi) \nonumber \\ \grad_\phi L_{\text{adv}}(\theta, \phi) &= -\grad_\phi h(p_{\theta},a_\phi) \nonumber \end{align}

Using AdvAs implies

\[ L^{\mr{AdvAs}}_{\mr{gen}}(\theta,\phi) = L_{\mr{gen}}(\theta,\phi) + \lambda\cdot r(\theta,\phi) \]

with \(r(\theta,\phi) = \norm{\grad_\phi {L_{\mr{adv}}}(\theta,\phi)}_2^2\) and \(\lambda\) being a hyperparameter.
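
A minimal sketch of the regularized generator loss on a toy problem. Everything here is an assumption made for illustration: the scalar game `h(theta, phi)`, the choice `L_gen = h` and `L_adv = -h` (one pair consistent with the gradient definitions above), the value of `lam`, and the use of finite differences instead of automatic differentiation.

```python
def h(theta, phi):
    """Hypothetical scalar game standing in for h(p_theta, a_phi)."""
    return theta * phi - 0.5 * phi ** 2

def central_diff(fn, x, eps=1e-5):
    """Central finite-difference approximation of a scalar derivative."""
    return (fn(x + eps) - fn(x - eps)) / (2 * eps)

def l_gen(theta, phi):
    return h(theta, phi)

def l_adv(theta, phi):
    return -h(theta, phi)

def advas_regularizer(theta, phi):
    """r(theta, phi) = ||grad_phi L_adv(theta, phi)||_2^2 (phi is a scalar here)."""
    grad_phi = central_diff(lambda p: l_adv(theta, p), phi)
    return grad_phi ** 2

def l_gen_advas(theta, phi, lam):
    """Generator loss with the AdvAs term: L_gen + lam * r."""
    return l_gen(theta, phi) + lam * advas_regularizer(theta, phi)

theta0, lam = 2.0, 0.1
phi_subopt = 0.5        # current, suboptimal adversary
phi_opt = theta0        # maximizes h(theta0, .), since d/dphi h = theta - phi

r_subopt = advas_regularizer(theta0, phi_subopt)   # (phi - theta)^2 = 2.25
r_opt = advas_regularizer(theta0, phi_opt)         # vanishes at the optimum

# Generator gradient of the regularized loss, with phi held fixed.
g_total = central_diff(lambda t: l_gen_advas(t, phi_subopt, lam), theta0)
```

In this toy game the regularizer's gradient with respect to `theta` is `2 * (theta - phi)`, so a descent step on the AdvAs term moves `theta` towards `phi`, i.e. towards a point where the current adversary is optimal.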

- Preserves convergence results:

- Using AdvAs does not interfere with adversary updates

- Assuming an optimal adversary we have \(L^{\mr{AdvAs}}_{\mr{gen}}(\theta,\phi) \rightarrow L_{\text{gen}}(\theta, \phi)\)

- Use an unbiased estimate \(\tilde{r}(\theta, \phi) \approx \norm{\grad_\phi {L_{\mr{adv}}}(\theta,\phi)}_2^2\)

- Consider two independent and unbiased estimates of \(\grad_\phi {L_{\mr{adv}}}(\theta,\phi)\), denoted \(\mX,\mX'\). By independence, \(\E\br{\trans{\mX}\mX'}=\trans{\E\br{\mX}}\E\br{\mX'}=\norm{\grad_{\phi}L_{\mr{adv}}(\theta,\phi)}_2^2\).
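
The role of independence can be seen by computing both expectations exactly on a tiny example. The gradient value and the two-point noise distribution below are made up for illustration; in training, the two estimates might for instance come from two independently drawn minibatches (an assumption, not something stated above).

```python
from itertools import product

true_grad = [1.0, -2.0]                      # illustrative value of grad_phi L_adv
noise_values = [[0.5, -1.0], [-0.5, 1.0]]    # zero-mean noise, each with probability 1/2

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def estimate(noise):
    """An unbiased estimate of true_grad: the true gradient plus zero-mean noise."""
    return [g + e for g, e in zip(true_grad, noise)]

squared_norm = dot(true_grad, true_grad)     # the quantity r we want to estimate

# Exact expectation of X^T X' over independent noise draws for X and X'.
pairs = list(product(noise_values, repeat=2))
independent = sum(dot(estimate(e1), estimate(e2)) for e1, e2 in pairs) / len(pairs)

# Exact expectation of X^T X when a single estimate is reused: biased upward
# by the expected squared norm of the noise.
reused = sum(dot(estimate(e), estimate(e)) for e in noise_values) / len(noise_values)
```

The product of two independent estimates recovers the squared norm exactly in expectation, whereas squaring a single estimate overshoots it by the noise variance.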

Removing the hyperparameter \(\lambda\)

- If \(\lambda\) is too large it may destabilize training. If too small it is equivalent to not using AdvAs.

Define

\begin{align*} \vg_{\mr{orig}}(\theta, \phi) &= \grad_{\theta}L_{\mr{gen}}(\theta,\phi) \\ \vg_{\mr{AdvAs}}(\theta, \phi) &= \grad_{\theta}\tilde{r}(\theta,\phi) \\ \vg_{\mr{total}}(\theta, \phi, \lambda) &= \grad_{\theta}L^{\mr{AdvAs}}_{\mr{gen}}(\theta,\phi) \\ &= \vg_{\mr{orig}}(\theta, \phi) + \lambda \vg_{\mr{AdvAs}}(\theta, \phi). \end{align*}

- We set

Experiments

MNIST and WGAN-GP gulrajani2017improved

- The best FID score reached is improved by \(28\%\) when using AdvAs

Figure 1: FID scores throughout training for the WGAN-GP objective on MNIST, estimated using 60,000 samples from the generator. We plot up to a maximum of 40,000 iterations. When plotting against time (right), this means some lines end before the two hours we show. The blue line shows the results with AdvAs, while the others are baselines with different values of \(n_{\mr{adv}}\), the number of adversary updates per generator update.

CelebA and StyleGAN karras2020analyzing

Figure 2: Bottom: FID scores throughout training estimated with \(1000\) samples, plotted against number of epochs (left) and training time (right). FID scores for AdvAs decrease more on each iteration at the start of training and converge to be \(7.5\%\) lower. Top: The left two columns show uncurated samples with and without AdvAs after 2 epochs. The rightmost two columns show uncurated samples from networks at the end of training. In each grid of images, each row is generated by a network with a different training seed and shows 3 images generated by passing a different random vector through this network. AdvAs leads to obvious qualitative improvement early in training.