Generative Modeling by Estimating Gradients of the Data Distribution

By Yang Song† & Stefano Ermon†

†Stanford University

Presented By

Andreas Munk

amunk@cs.ubc.ca

May 12, 2020

Table of Contents

- Generative Modeling by Estimating Gradients of the Data Distribution cite:song2019generative

- Langevin Dynamics cite:wibisono2018sampling

- Score Matching cite:hyvarinen2005estimation

- Sliced Score Matching cite:song2019sliced

- Denoising Score Matching cite:vincent2011connection

- Langevin Dynamics in the Noise Perturbed Manifold

- Why?

- Annealed Langevin Dynamics

- Practical Considerations

- Experiments

- Conclusion

- References

\( \newcommand{\ie}{i.e.} \newcommand{\eg}{e.g.} \newcommand{\etal}{\textit{et~al.}} \newcommand{\wrt}{w.r.t.} \newcommand{\bra}[1]{\langle #1 \mid} \newcommand{\ket}[1]{\mid #1\rangle} \newcommand{\braket}[2]{\langle #1 \mid #2 \rangle} \newcommand{\bigbra}[1]{\big\langle #1 \big\mid} \newcommand{\bigket}[1]{\big\mid #1 \big\rangle} \newcommand{\bigbraket}[2]{\big\langle #1 \big\mid #2 \big\rangle} \newcommand{\grad}{\boldsymbol{\nabla}} \newcommand{\divop}{\grad\scap} \newcommand{\pp}{\partial} \newcommand{\ppsqr}{\partial^2} \renewcommand{\vec}[1]{\boldsymbol{#1}} \newcommand{\trans}[1]{#1^\mr{T}} \newcommand{\dm}{\,\mathrm{d}} \newcommand{\complex}{\mathbb{C}} \newcommand{\real}{\mathbb{R}} \newcommand{\krondel}[1]{\delta_{#1}} \newcommand{\limit}[2]{\mathop{\longrightarrow}_{#1 \rightarrow #2}} \newcommand{\measure}{\mathbb{P}} \newcommand{\scap}{\!\cdot\!} \newcommand{\intd}[1]{\int\!\dm#1\: } \newcommand{\ave}[1]{\left\langle #1 \right\rangle} \newcommand{\br}[1]{\left\lbrack #1 \right\rbrack} \newcommand{\paren}[1]{\left(#1\right)} \newcommand{\tub}[1]{\left\{#1\right\}} \newcommand{\mr}[1]{\mathrm{#1}} \newcommand{\evalat}[1]{\left.#1\right\vert} \newcommand*{\given}{\mid} \newcommand{\abs}[1]{\left\lvert#1\right\rvert} \newcommand{\norm}[1]{\left\lVert#1\right\rVert} \newcommand{\figleft}{\em (Left)} \newcommand{\figcenter}{\em (Center)} \newcommand{\figright}{\em (Right)} \newcommand{\figtop}{\em (Top)} \newcommand{\figbottom}{\em (Bottom)} \newcommand{\captiona}{\em (a)} \newcommand{\captionb}{\em (b)} \newcommand{\captionc}{\em (c)} \newcommand{\captiond}{\em (d)} \newcommand{\newterm}[1]{\bf #1} \def\ceil#1{\lceil #1 \rceil} \def\floor#1{\lfloor #1 \rfloor} \def\1{\boldsymbol{1}} \newcommand{\train}{\mathcal{D}} \newcommand{\valid}{\mathcal{D_{\mathrm{valid}}}} \newcommand{\test}{\mathcal{D_{\mathrm{test}}}} \def\eps{\epsilon} \def\reta{\textnormal{$\eta$}} \def\ra{\textnormal{a}} \def\rb{\textnormal{b}} \def\rc{\textnormal{c}} \def\rd{\textnormal{d}} 
\def\re{\textnormal{e}} \def\rf{\textnormal{f}} \def\rg{\textnormal{g}} \def\rh{\textnormal{h}} \def\ri{\textnormal{i}} \def\rj{\textnormal{j}} \def\rk{\textnormal{k}} \def\rl{\textnormal{l}} \def\rn{\textnormal{n}} \def\ro{\textnormal{o}} \def\rp{\textnormal{p}} \def\rq{\textnormal{q}} \def\rr{\textnormal{r}} \def\rs{\textnormal{s}} \def\rt{\textnormal{t}} \def\ru{\textnormal{u}} \def\rv{\textnormal{v}} \def\rw{\textnormal{w}} \def\rx{\textnormal{x}} \def\ry{\textnormal{y}} \def\rz{\textnormal{z}} \def\rvepsilon{\mathbf{\epsilon}} \def\rvtheta{\mathbf{\theta}} \def\rva{\mathbf{a}} \def\rvb{\mathbf{b}} \def\rvc{\mathbf{c}} \def\rvd{\mathbf{d}} \def\rve{\mathbf{e}} \def\rvf{\mathbf{f}} \def\rvg{\mathbf{g}} \def\rvh{\mathbf{h}} \def\rvu{\mathbf{i}} \def\rvj{\mathbf{j}} \def\rvk{\mathbf{k}} \def\rvl{\mathbf{l}} \def\rvm{\mathbf{m}} \def\rvn{\mathbf{n}} \def\rvo{\mathbf{o}} \def\rvp{\mathbf{p}} \def\rvq{\mathbf{q}} \def\rvr{\mathbf{r}} \def\rvs{\mathbf{s}} \def\rvt{\mathbf{t}} \def\rvu{\mathbf{u}} \def\rvv{\mathbf{v}} \def\rvw{\mathbf{w}} \def\rvx{\mathbf{x}} \def\rvy{\mathbf{y}} \def\rvz{\mathbf{z}} \def\erva{\textnormal{a}} \def\ervb{\textnormal{b}} \def\ervc{\textnormal{c}} \def\ervd{\textnormal{d}} \def\erve{\textnormal{e}} \def\ervf{\textnormal{f}} \def\ervg{\textnormal{g}} \def\ervh{\textnormal{h}} \def\ervi{\textnormal{i}} \def\ervj{\textnormal{j}} \def\ervk{\textnormal{k}} \def\ervl{\textnormal{l}} \def\ervm{\textnormal{m}} \def\ervn{\textnormal{n}} \def\ervo{\textnormal{o}} \def\ervp{\textnormal{p}} \def\ervq{\textnormal{q}} \def\ervr{\textnormal{r}} \def\ervs{\textnormal{s}} \def\ervt{\textnormal{t}} \def\ervu{\textnormal{u}} \def\ervv{\textnormal{v}} \def\ervw{\textnormal{w}} \def\ervx{\textnormal{x}} \def\ervy{\textnormal{y}} \def\ervz{\textnormal{z}} \def\rmA{\mathbf{A}} \def\rmB{\mathbf{B}} \def\rmC{\mathbf{C}} \def\rmD{\mathbf{D}} \def\rmE{\mathbf{E}} \def\rmF{\mathbf{F}} \def\rmG{\mathbf{G}} \def\rmH{\mathbf{H}} \def\rmI{\mathbf{I}} \def\rmJ{\mathbf{J}} 
\def\rmK{\mathbf{K}} \def\rmL{\mathbf{L}} \def\rmM{\mathbf{M}} \def\rmN{\mathbf{N}} \def\rmO{\mathbf{O}} \def\rmP{\mathbf{P}} \def\rmQ{\mathbf{Q}} \def\rmR{\mathbf{R}} \def\rmS{\mathbf{S}} \def\rmT{\mathbf{T}} \def\rmU{\mathbf{U}} \def\rmV{\mathbf{V}} \def\rmW{\mathbf{W}} \def\rmX{\mathbf{X}} \def\rmY{\mathbf{Y}} \def\rmZ{\mathbf{Z}} \def\ermA{\textnormal{A}} \def\ermB{\textnormal{B}} \def\ermC{\textnormal{C}} \def\ermD{\textnormal{D}} \def\ermE{\textnormal{E}} \def\ermF{\textnormal{F}} \def\ermG{\textnormal{G}} \def\ermH{\textnormal{H}} \def\ermI{\textnormal{I}} \def\ermJ{\textnormal{J}} \def\ermK{\textnormal{K}} \def\ermL{\textnormal{L}} \def\ermM{\textnormal{M}} \def\ermN{\textnormal{N}} \def\ermO{\textnormal{O}} \def\ermP{\textnormal{P}} \def\ermQ{\textnormal{Q}} \def\ermR{\textnormal{R}} \def\ermS{\textnormal{S}} \def\ermT{\textnormal{T}} \def\ermU{\textnormal{U}} \def\ermV{\textnormal{V}} \def\ermW{\textnormal{W}} \def\ermX{\textnormal{X}} \def\ermY{\textnormal{Y}} \def\ermZ{\textnormal{Z}} \def\vzero{\boldsymbol{0}} \def\vone{\boldsymbol{1}} \def\vmu{\boldsymbol{\mu}} \def\vtheta{\boldsymbol{\theta}} \def\va{\boldsymbol{a}} \def\vb{\boldsymbol{b}} \def\vc{\boldsymbol{c}} \def\vd{\boldsymbol{d}} \def\ve{\boldsymbol{e}} \def\vf{\boldsymbol{f}} \def\vg{\boldsymbol{g}} \def\vh{\boldsymbol{h}} \def\vi{\boldsymbol{i}} \def\vj{\boldsymbol{j}} \def\vk{\boldsymbol{k}} \def\vl{\boldsymbol{l}} \def\vm{\boldsymbol{m}} \def\vn{\boldsymbol{n}} \def\vo{\boldsymbol{o}} \def\vp{\boldsymbol{p}} \def\vq{\boldsymbol{q}} \def\vr{\boldsymbol{r}} \def\vs{\boldsymbol{s}} \def\vt{\boldsymbol{t}} \def\vu{\boldsymbol{u}} \def\vv{\boldsymbol{v}} \def\vw{\boldsymbol{w}} \def\vx{\boldsymbol{x}} \def\vy{\boldsymbol{y}} \def\vz{\boldsymbol{z}} \def\evalpha{\alpha} \def\evbeta{\beta} \def\evepsilon{\epsilon} \def\evlambda{\lambda} \def\evomega{\omega} \def\evmu{\mu} \def\evpsi{\psi} \def\evsigma{\sigma} \def\evtheta{\theta} \def\eva{a} \def\evb{b} \def\evc{c} \def\evd{d} \def\eve{e} 
\def\evf{f} \def\evg{g} \def\evh{h} \def\evi{i} \def\evj{j} \def\evk{k} \def\evl{l} \def\evm{m} \def\evn{n} \def\evo{o} \def\evp{p} \def\evq{q} \def\evr{r} \def\evs{s} \def\evt{t} \def\evu{u} \def\evv{v} \def\evw{w} \def\evx{x} \def\evy{y} \def\evz{z} \def\mA{\boldsymbol{A}} \def\mB{\boldsymbol{B}} \def\mC{\boldsymbol{C}} \def\mD{\boldsymbol{D}} \def\mE{\boldsymbol{E}} \def\mF{\boldsymbol{F}} \def\mG{\boldsymbol{G}} \def\mH{\boldsymbol{H}} \def\mI{\boldsymbol{I}} \def\mJ{\boldsymbol{J}} \def\mK{\boldsymbol{K}} \def\mL{\boldsymbol{L}} \def\mM{\boldsymbol{M}} \def\mN{\boldsymbol{N}} \def\mO{\boldsymbol{O}} \def\mP{\boldsymbol{P}} \def\mQ{\boldsymbol{Q}} \def\mR{\boldsymbol{R}} \def\mS{\boldsymbol{S}} \def\mT{\boldsymbol{T}} \def\mU{\boldsymbol{U}} \def\mV{\boldsymbol{V}} \def\mW{\boldsymbol{W}} \def\mX{\boldsymbol{X}} \def\mY{\boldsymbol{Y}} \def\mZ{\boldsymbol{Z}} \def\mBeta{\boldsymbol{\beta}} \def\mPhi{\boldsymbol{\Phi}} \def\mLambda{\boldsymbol{\Lambda}} \def\mSigma{\boldsymbol{\Sigma}} \def\gA{\mathcal{A}} \def\gB{\mathcal{B}} \def\gC{\mathcal{C}} \def\gD{\mathcal{D}} \def\gE{\mathcal{E}} \def\gF{\mathcal{F}} \def\gG{\mathcal{G}} \def\gH{\mathcal{H}} \def\gI{\mathcal{I}} \def\gJ{\mathcal{J}} \def\gK{\mathcal{K}} \def\gL{\mathcal{L}} \def\gM{\mathcal{M}} \def\gN{\mathcal{N}} \def\gO{\mathcal{O}} \def\gP{\mathcal{P}} \def\gQ{\mathcal{Q}} \def\gR{\mathcal{R}} \def\gS{\mathcal{S}} \def\gT{\mathcal{T}} \def\gU{\mathcal{U}} \def\gV{\mathcal{V}} \def\gW{\mathcal{W}} \def\gX{\mathcal{X}} \def\gY{\mathcal{Y}} \def\gZ{\mathcal{Z}} \def\sA{\mathbb{A}} \def\sB{\mathbb{B}} \def\sC{\mathbb{C}} \def\sD{\mathbb{D}} \def\sF{\mathbb{F}} \def\sG{\mathbb{G}} \def\sH{\mathbb{H}} \def\sI{\mathbb{I}} \def\sJ{\mathbb{J}} \def\sK{\mathbb{K}} \def\sL{\mathbb{L}} \def\sM{\mathbb{M}} \def\sN{\mathbb{N}} \def\sO{\mathbb{O}} \def\sP{\mathbb{P}} \def\sQ{\mathbb{Q}} \def\sR{\mathbb{R}} \def\sS{\mathbb{S}} \def\sT{\mathbb{T}} \def\sU{\mathbb{U}} \def\sV{\mathbb{V}} \def\sW{\mathbb{W}} 
\def\sX{\mathbb{X}} \def\sY{\mathbb{Y}} \def\sZ{\mathbb{Z}} \def\emLambda{\Lambda} \def\emA{A} \def\emB{B} \def\emC{C} \def\emD{D} \def\emE{E} \def\emF{F} \def\emG{G} \def\emH{H} \def\emI{I} \def\emJ{J} \def\emK{K} \def\emL{L} \def\emM{M} \def\emN{N} \def\emO{O} \def\emP{P} \def\emQ{Q} \def\emR{R} \def\emS{S} \def\emT{T} \def\emU{U} \def\emV{V} \def\emW{W} \def\emX{X} \def\emY{Y} \def\emZ{Z} \def\emSigma{\Sigma} \newcommand{\etens}[1]{\mathsfit{#1}} \def\etLambda{\etens{\Lambda}} \def\etA{\etens{A}} \def\etB{\etens{B}} \def\etC{\etens{C}} \def\etD{\etens{D}} \def\etE{\etens{E}} \def\etF{\etens{F}} \def\etG{\etens{G}} \def\etH{\etens{H}} \def\etI{\etens{I}} \def\etJ{\etens{J}} \def\etK{\etens{K}} \def\etL{\etens{L}} \def\etM{\etens{M}} \def\etN{\etens{N}} \def\etO{\etens{O}} \def\etP{\etens{P}} \def\etQ{\etens{Q}} \def\etR{\etens{R}} \def\etS{\etens{S}} \def\etT{\etens{T}} \def\etU{\etens{U}} \def\etV{\etens{V}} \def\etW{\etens{W}} \def\etX{\etens{X}} \def\etY{\etens{Y}} \def\etZ{\etens{Z}} \newcommand{\pdata}{p_{\rm{data}}} \newcommand{\ptrain}{\hat{p}_{\rm{data}}} \newcommand{\Ptrain}{\hat{P}_{\rm{data}}} \newcommand{\pmodel}{p_{\rm{model}}} \newcommand{\Pmodel}{P_{\rm{model}}} \newcommand{\ptildemodel}{\tilde{p}_{\rm{model}}} \newcommand{\pencode}{p_{\rm{encoder}}} \newcommand{\pdecode}{p_{\rm{decoder}}} \newcommand{\precons}{p_{\rm{reconstruct}}} \newcommand{\laplace}{\mathrm{Laplace}} % Laplace distribution \newcommand{\E}{\mathbb{E}} \newcommand{\Ls}{\mathcal{L}} \newcommand{\R}{\mathbb{R}} \newcommand{\emp}{\tilde{p}} \newcommand{\lr}{\alpha} \newcommand{\reg}{\lambda} \newcommand{\rect}{\mathrm{rectifier}} \newcommand{\softmax}{\mathrm{softmax}} \newcommand{\sigmoid}{\sigma} \newcommand{\softplus}{\zeta} \newcommand{\KL}{D_{\mathrm{KL}}} \newcommand{\Var}{\mathrm{Var}} \newcommand{\standarderror}{\mathrm{SE}} \newcommand{\Cov}{\mathrm{Cov}} \newcommand{\normlzero}{L^0} \newcommand{\normlone}{L^1} \newcommand{\normltwo}{L^2} \newcommand{\normlp}{L^p} 
\newcommand{\normmax}{L^\infty} \newcommand{\parents}{Pa} % See usage in notation.tex. Chosen to match Daphne's book. \DeclareMathOperator*{\argmax}{arg\,max} \DeclareMathOperator*{\argmin}{arg\,min} \DeclareMathOperator{\sign}{sign} \DeclareMathOperator{\Tr}{Tr} \let\ab\allowbreak \newcommand{\vxlat}{\vx_{\mr{lat}}} \newcommand{\vxobs}{\vx_{\mr{obs}}} \newcommand{\block}[1]{\underbrace{\begin{matrix}1 & \cdots & 1\end{matrix}}_{#1}} \newcommand{\blockt}[1]{\begin{rcases} \begin{matrix} ~\\ ~\\ ~ \end{matrix} \end{rcases}{#1}} \newcommand{\tikzmark}[1]{\tikz[overlay,remember picture] \node (#1) {};} \)

Generative Modeling by Estimating Gradients of the Data Distribution song2019generative

- Likelihood-free generative modeling

- Proposes annealed Langevin dynamics for sampling

- Based on score matching

- Main benefits:

- No adversarial methods

- Does not require sampling from the model during training

- Provides a principled way to compare models (within the same framework)

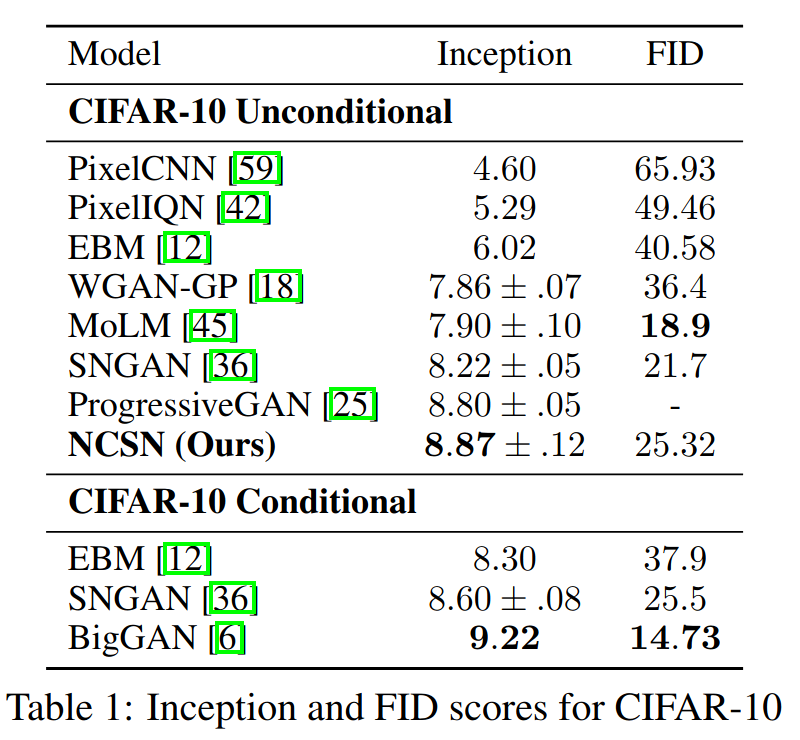

- Achieve state-of-the-art inception score on CIFAR-10

Langevin Dynamics wibisono2018sampling

- \(X\) is a random variable in \(\real^{d}\)

- \(p\) is a differentiable target density

- \(W\) is the standard Brownian motion (in \(\real^{d}\))

- Langevin Dynamics are: \[\dm{X}_{t} = \grad \ln p(X_t)\dm{t} + \sqrt{2}\dm{W}_{t}\] with the distribution of \(X_{t}\) following these dynamics converging to \(p\)

- The discretized version for small time steps \(\epsilon\) is \[\Delta \vx = \grad_{\vx}\ln p(\vx)\epsilon + \vz,\quad \vz\sim\mN(0,2\epsilon\mI)\]

- Equivalently \[\vx_{t+1}\sim\mN(\vx_{t}+\grad_{\vx}\ln p(\vx_{t})\epsilon, 2\epsilon\mI)\]

- May correct for the discretization error using Metropolis-Hastings with proposal \[q(\vx_{t+1}|\vx_{t}) =\mN(\vx_{t}+\grad_{\vx}\ln p(\vx_{t})\epsilon, 2\epsilon\mI)\]

- Assuming \(\epsilon\) is small enough and \(t\) is sufficiently large, the acceptance rate approaches one

- Used also for inference - see e.g. Stochastic Gradient Langevin Dynamics welling2011bayesian

- Think of Langevin Dynamics as a form of a Markov Chain
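The discretized update can be sketched in a few lines. This is a minimal illustration rather than anything from the presented paper: the target \(\mN(3, 1)\), the step size, the chain length, and the names `langevin_chain` and `gaussian_score` are all assumptions chosen for the example, and no Metropolis-Hastings correction is applied.

```python
import math
import random

def langevin_chain(score, x0, eps, n_steps, rng):
    """Run one discretized Langevin chain and return its final state."""
    x = x0
    for _ in range(n_steps):
        # Drift eps * score(x) plus Gaussian noise with variance 2 * eps.
        x = x + eps * score(x) + math.sqrt(2.0 * eps) * rng.gauss(0.0, 1.0)
    return x

MU = 3.0  # illustrative target mean (an assumption for this example)

def gaussian_score(x):
    """Score of the target N(MU, 1): d/dx ln p(x) = -(x - MU)."""
    return -(x - MU)

rng = random.Random(0)
samples = [langevin_chain(gaussian_score, 0.0, 0.02, 500, rng) for _ in range(1000)]

sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / len(samples)
print(f"mean = {sample_mean:.3f}, variance = {sample_var:.3f}")  # compare with 3 and 1
```

Only the score of the target enters the update, which is why an estimate of \(\grad_{\vx}\ln p(\vx)\) is enough to sample.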

Score Matching hyvarinen2005estimation

- Can we estimate \(\grad_{\vx}\ln p(\vx)\)?

- Define \(s_{\theta}(\vx)=\grad_{\vx}\ln q_{\theta}(\vx), ~s_{\theta}:\real^{d}\rightarrow\real^{d}\)

- Train \(s_{\theta}\) to match \(\grad_{\vx}\ln p(\vx)\) by minimizing, \[\begin{align*}J(\theta)&=\frac{1}{2}\E_{p(\vx)}\br{\norm{s_{\theta}(\vx) - \grad_{\vx}\ln p(\vx)}^2} \\ &= \E_{p(\vx)}\br{\mr{tr}(\grad_{\vx}s_{\theta}(\vx)) + \frac{1}{2}\norm{s_{\theta}(\vx)}^2} + \mr{const}\end{align*}\]

- Consistency: Assuming \(\exists\theta^{*}(s_{\theta^{*}}(\vx)=\grad_{\vx}\ln p(\vx))\) then \(J(\theta)=0\Leftrightarrow\theta=\theta^{*}\)

- Involves the Jacobian of \(s_{\theta}\) (the Hessian of \(\ln q_{\theta}\)), which is expensive to compute in high dimensions

- Weak regularity conditions:

- \(p(\vx)\) is differentiable

- \(\E_{p(\vx)}\br{\norm{s_{\theta}(\vx)}^{2}}<\infty,~\forall \theta\)

- \(\E_{p(\vx)}\br{\norm{\grad_{\vx}\ln p(\vx)}^{2}}<\infty\)

- \(\lim\limits_{\norm{\vx}\rightarrow\infty} p(\vx)s_{\theta}(\vx)=\vec{0},~\forall \theta\)
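As a small sanity check of the tractable form of \(J(\theta)\), consider a one-dimensional Gaussian model \(q_{\theta}=\mN(m, v)\), for which \(s_{\theta}(x)=-(x-m)/v\) and the trace term reduces to \(-1/v\). The sketch below is an illustration under those assumptions (the data distribution and the helper `score_matching_loss` are made up for the example); it evaluates the empirical objective and compares the value at the sample moments with a few perturbed parameters.

```python
import random

def score_matching_loss(data, m, v):
    """Empirical E[tr(grad s) + 0.5 * ||s||^2] for the 1-D model N(m, v)."""
    total = 0.0
    for x in data:
        s = -(x - m) / v   # model score s_theta(x)
        ds_dx = -1.0 / v   # derivative of the score (the 1-D trace term)
        total += ds_dx + 0.5 * s * s
    return total / len(data)

rng = random.Random(0)
data = [rng.gauss(2.0, 1.5) for _ in range(5000)]  # illustrative data

# Sample moments: setting the gradient of the loss to zero gives exactly these.
m_hat = sum(data) / len(data)
v_hat = sum((x - m_hat) ** 2 for x in data) / len(data)

best = score_matching_loss(data, m_hat, v_hat)
perturbed = [(m_hat + 0.5, v_hat), (m_hat, 0.5 * v_hat), (m_hat, 2.0 * v_hat)]
others = [score_matching_loss(data, m, v) for m, v in perturbed]
print(best, others)
```

No evaluation of \(p(\vx)\) or its gradient is needed, only samples from it.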

Proofs of the Objective Reduction

- We need only consider \(-\intd{\vx}p(\vx)\trans{s_{\theta}(\vx)}\grad_{\vx}\ln p(\vx)=-\sum_{i}\intd{\vx}p(\vx)s_{i}(\vx)\frac{\pp \ln p(\vx)}{\pp x_{i}}=-\sum_{i}\intd{\vx}s_{i}(\vx)\frac{\pp p(\vx)}{\pp x_{i}}\), where \(s_{i}(\vx)=s_{\theta}(\vx)_{i}\)

- We restrict our attention to a single \(i\) as the derivation holds for every \(i\)

- Using the shorthand notation \(\pp_{i}f(\vx)=\frac{\pp f(\vx)}{\pp x_{i}}\) we now prove that \[\intd{\vx}s_{i}(\vx)\pp_{i}p(\vx)=-\intd{\vx}p(\vx)\pp_{i}s_{i}(\vx)\]

- Recall that for a differentiable function \(h(\vx)=p(\vx)s_{i}(\vx)\) we have \[\pp_{i}h(\vx)=\pp_{i}\paren{p(\vx)s_{i}(\vx)} = p(\vx)\pp_{i}s_{i}(\vx)+s_{i}(\vx)\pp_{i}p(\vx)\] and so \[\int_{b}^{a}\dm{x_{i}}\pp_{i}h(\vx) = h(x_{1},\dots,x_{i-1},a,x_{i+1},\dots,x_{d}) -h(x_{1},\dots,x_{i-1},b,x_{i+1},\dots,x_{d}) = \int_{b}^{a}p(\vx)\pp_{i}s_{i}(\vx)+s_{i}(\vx)\pp_{i}p(\vx)\dm{x_{i}}\]

- From the regularity conditions we have \(\lim\limits_{\norm{\vx}\rightarrow\infty} p(\vx)s_{\theta}(\vx)=\vec{0},~\forall \theta\), \[\int_{-\infty}^{\infty}\dm{x_{i}}\pp_{i}h(\vx) = \lim\limits_{a\rightarrow\infty,b\rightarrow-\infty} \br{h(x_{1},\dots,x_{i-1},a,x_{i+1},\dots,x_{d}) -h(x_{1},\dots,x_{i-1},b,x_{i+1},\dots,x_{d})}=0\] \[\Downarrow\] \[\int_{-\infty}^{\infty}p(\vx)\pp_{i}s_{i}(\vx)\dm{x_{i}} = -\int_{-\infty}^{\infty}s_{i}(\vx)\pp_{i}p(\vx)\dm{x_{i}}\]

- Additional outer integrals do not change this and so we have \[\intd{\vx}s_{i}(\vx)\pp_{i}p(\vx)=-\intd{\vx}p(\vx)\pp_{i}s_{i}(\vx) = -\E_{p(\vx)}\br{\pp_{i}s_{i}(\vx)}\]

- Identifying \[\sum_{i=1}^{d}\pp_{i}s_{i}(\vx) = \mr{tr}(\grad_{\vx}s_{\theta}(\vx))\] concludes the proof
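The integration-by-parts identity can be checked numerically in one dimension. The sketch below assumes a standard normal \(p\) and a hypothetical bounded score model \(s(x)=\sin(x)\), so that \(p(x)s(x)\rightarrow 0\) at infinity as the regularity condition requires; the quadrature helper `integrate` is likewise an assumption of the example.

```python
import math

def p(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def dp(x):
    """Derivative of the standard normal density."""
    return -x * p(x)

def s(x):
    """Hypothetical score model; bounded, so p(x) * s(x) vanishes at infinity."""
    return math.sin(x)

def ds(x):
    """Derivative of the hypothetical score model."""
    return math.cos(x)

def integrate(f, a=-10.0, b=10.0, n=20000):
    """Composite midpoint rule on [a, b]; the Gaussian tails beyond are negligible."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

lhs = integrate(lambda x: s(x) * dp(x))    # integral of s * dp/dx
rhs = -integrate(lambda x: p(x) * ds(x))   # minus integral of p * ds/dx
print(lhs, rhs)
```

For this choice both sides equal \(-\E_{p(x)}\br{\cos x}=-e^{-1/2}\).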

Sliced Score Matching song2019sliced

- Preferably no Jacobian in score matching

- Projects the score functions onto a random direction \(\vv\in\real^{d}\)

- Leads to the following objective

\[\begin{align*}J_{\mr{SSM}}(\theta) &=\frac{1}{2}\E_{p(\vv)}\br{\E_{p(\vx)}\br{\norm{\trans{\vv} s_{\theta}(\vx) - \trans{\vv}\grad_{\vx}\ln p(\vx)}^2}} \\ &=\E_{p(\vv)}\br{\E_{p(\vx)}\br{\trans{\vv}\grad_{\vx}s_{\theta}(\vx)\vv + \frac{1}{2}(\trans{\vv} s_{\theta}(\vx))^{2}}} + \mr{const}\end{align*}\]

- If \(p(\vv)=\mN(0,\mI)\), \[\E_{p(\vv)}\br{(\trans{\vv} s_{\theta}(\vx))^{2}} =\norm{s_{\theta}(\vx)}^{2}\]

- Similar weak regularity conditions and satisfies consistency
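Two facts make the random projection work for \(p(\vv)=\mN(0,\mI)\): \(\E\br{\trans{\vv}A\vv}=\mr{tr}(A)\) for any square matrix \(A\), and \(\E\br{(\trans{\vv}s)^{2}}=\norm{s}^{2}\). The Monte Carlo check below is a sketch with made-up inputs: the matrix `A` stands in for the Jacobian \(\grad_{\vx}s_{\theta}(\vx)\) at one point and `s` for the score vector there.

```python
import random

def quad_form(v, A):
    """Return v^T A v for a square matrix A given as nested lists."""
    d = len(v)
    return sum(v[i] * A[i][j] * v[j] for i in range(d) for j in range(d))

# Hypothetical Jacobian and score at a single point (illustrative values only).
A = [[-2.0, 0.3, 0.0],
     [0.3, -1.0, 0.5],
     [0.0, 0.5, -0.5]]
s = [1.0, -2.0, 0.5]

exact_trace = sum(A[i][i] for i in range(3))
exact_norm2 = sum(si * si for si in s)

rng = random.Random(0)
n = 20000
trace_est = 0.0
norm2_est = 0.0
for _ in range(n):
    v = [rng.gauss(0.0, 1.0) for _ in range(3)]           # random direction v ~ N(0, I)
    trace_est += quad_form(v, A)                           # v^T A v
    norm2_est += sum(vi * si for vi, si in zip(v, s)) ** 2  # (v^T s)^2
trace_est /= n
norm2_est /= n

print(exact_trace, trace_est)
print(exact_norm2, norm2_est)
```

In a real model \(\trans{\vv}\grad_{\vx}s_{\theta}(\vx)\vv\) would be obtained from a vector-Jacobian product, so the full Jacobian is never formed.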

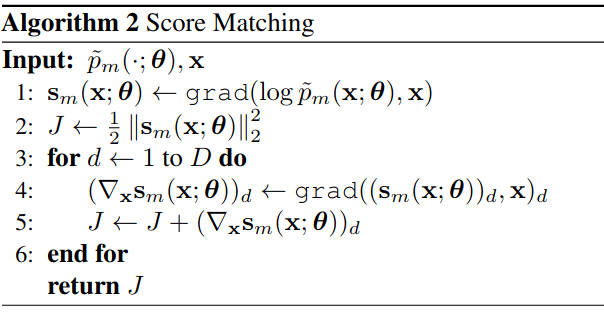

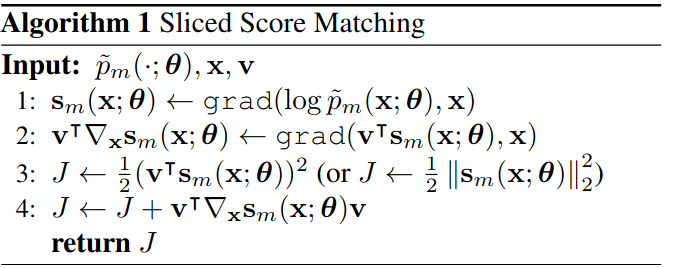

Algorithmic Differences

Figure 1: Score matching algorithm

Figure 2: Sliced score matching algorithm

Denoising Score Matching vincent2011connection

- Perturb the data with noise drawn from a “perturbation” distribution \(p_{\sigma}(\tilde{\vx}|\vx)\)

- \(\sigma\) denotes noise level

- Objective: \[\begin{align*}J_{\mr{NSM}}(\theta) &=\frac{1}{2}\E_{p_{\sigma}(\tilde{\vx}|\vx)p(\vx)}\br{ \norm{s_{\theta}(\tilde{\vx}) - \grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx}|\vx)}^2} \\ &=\E_{p_{\sigma}(\tilde{\vx}|\vx)p(\vx)}\br{ \frac{1}{2}\norm{s_{\theta}(\tilde{\vx})}^{2} - \trans{s_{\theta}(\tilde{\vx})}\grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx}|\vx)} + \mr{const}\end{align*}\]

- No Jacobian term

- Minimizing \(J_{\mr{NSM}}\) is equivalent to solving for the optimal \(\theta^{*}\) such that \[s_{\theta^{*}}(\tilde{\vx}) = \grad_{\tilde{\vx}}\ln\intd{\vx} p(\vx) p_{\sigma}(\tilde{\vx}|\vx) =\grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx})\]

- Notice that as \(\sigma\rightarrow 0\), \(p_{\sigma}(\tilde{\vx})\rightarrow p(\vx)\)

- Exploited by song2019generative
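A toy case where the optimum is known in closed form: if \(p(x)=\mN(0,1)\) and \(p_{\sigma}(\tilde{x}|x)=\mN(x,\sigma^{2})\), then \(p_{\sigma}(\tilde{x})=\mN(0,1+\sigma^{2})\) with score \(-\tilde{x}/(1+\sigma^{2})\). The sketch below assumes a linear score model \(s_{a}(\tilde{x})=a\tilde{x}\) (a modelling choice made only for this example) and fits \(a\) by least squares against the conditional score, which is what minimizing the denoising objective amounts to here.

```python
import random

rng = random.Random(0)
sigma = 0.5   # illustrative noise level
n = 20000

num = 0.0
den = 0.0
for _ in range(n):
    x = rng.gauss(0.0, 1.0)                    # clean sample from p(x) = N(0, 1)
    x_tilde = x + sigma * rng.gauss(0.0, 1.0)  # perturbed sample
    target = -(x_tilde - x) / sigma ** 2       # grad of ln p_sigma(x_tilde | x)
    num += x_tilde * target
    den += x_tilde * x_tilde

# Least-squares minimizer of 0.5 * E[(a * x_tilde - target)^2] over a.
a_hat = num / den
# Slope of the true marginal score of p_sigma = N(0, 1 + sigma^2).
a_true = -1.0 / (1.0 + sigma ** 2)
print(a_hat, a_true)
```

The regression target only uses the known perturbation kernel, yet the fitted slope estimates the score of the marginal \(p_{\sigma}(\tilde{x})\).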

Proofs of the Minimization Equivalence

- We prove that minimizing \(J_{\mr{NSM}}(\theta)\) is equivalent to minimizing \[J_{\mr{D}}(\theta)=\frac{1}{2}\E_{p_{\sigma}(\tilde{\vx})}\br{ \norm{s_{\theta}(\tilde{\vx}) - \grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx})}^2}\]

- We need only show that \[\begin{equation}\label{eq:to-prove}\E_{p_{\sigma}(\tilde{\vx})}\br{ \trans{s_{\theta}(\tilde{\vx})}\grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx})} = \E_{p_{\sigma}(\tilde{\vx}|\vx)p(\vx)}\br{ \trans{s_{\theta}(\tilde{\vx})}\grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx}|\vx)}\end{equation}\]

- Notice that \[\begin{equation}\label{eq:grad-marg}\grad_{{\tilde{\vx}}}\ln p_{\sigma}(\tilde{\vx}) = \grad_{\tilde{\vx}}\ln\intd{\vx}p_{\sigma}(\tilde{\vx}|\vx)p(\vx) = \frac{1}{p_{\sigma}(\tilde{\vx})} \intd{\vx}\grad_{\tilde{\vx}}p_{\sigma}(\tilde{\vx}|\vx)p(\vx) \end{equation}\]

- Substituting \ref{eq:grad-marg} into the expression of the left-hand side of \ref{eq:to-prove} we find \[\begin{align*}\E_{p_{\sigma}(\tilde{\vx})}\br{ \trans{s_{\theta}(\tilde{\vx})}\grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx})} &= \int\trans{s_{\theta}(\tilde{\vx})} \int\grad_{\tilde{\vx}}p_{\sigma}(\tilde{\vx}|\vx)p(\vx) \dm{\vx}\dm{\tilde{\vx}} \\ &= \int\int \trans{s_{\theta}(\tilde{\vx})}\grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx}|\vx)p_{\sigma}(\tilde{\vx}|\vx)p(\vx) \dm{\vx}\dm{\tilde{\vx}} \\ &= \E_{p_{\sigma}(\tilde{\vx}|\vx)p(\vx)}\br{ \trans{s_{\theta}(\tilde{\vx})}\grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx}|\vx)}\end{align*}\]

- Leading to \[J_{\mr{NSM}}(\theta)=J_{\mr{D}}(\theta) + C,\] where \(C\) is a constant independent of \(\theta\), equal to the difference between the constant terms of \(J_{\mr{NSM}}(\theta)\) and \(J_{\mr{D}}(\theta)\)

Langevin Dynamics in the Noise Perturbed Manifold

- Perturb data with Gaussian noise - \(\mN(0,\sigma^{2}\mI)\)

- Train noise conditional score estimator \(s_{\theta}(\tilde{\vx},\sigma)\approx\grad_{\tilde{\vx}}\ln p_{\sigma}(\tilde{\vx})\) (Deep Neural Network)

- Called Noise Conditional Score Network (NCSN)

- Employ Langevin Dynamics in the perturbed space using \(s_{\theta}(\tilde{\vx},\sigma)\)

- The perturbed density \(p_{\sigma}(\tilde{\vx})\) is ensured to have support on all of \(\real^{d}\)

Why?

- The denoising score matching objective has no Jacobian terms

- The theory behind score matching requires a well-defined \(p(\vx)\) everywhere in \(\real^{d}\)

- Typically \(p(\vx)\) has support restricted to a low-dimensional manifold embedded in the ambient space

- Langevin dynamics (LD) is likely to mix poorly if \(p(\vx)\) has large regions with near-zero probability

- May additionally lead to poor training of \(s_{\theta}(\vx)\)

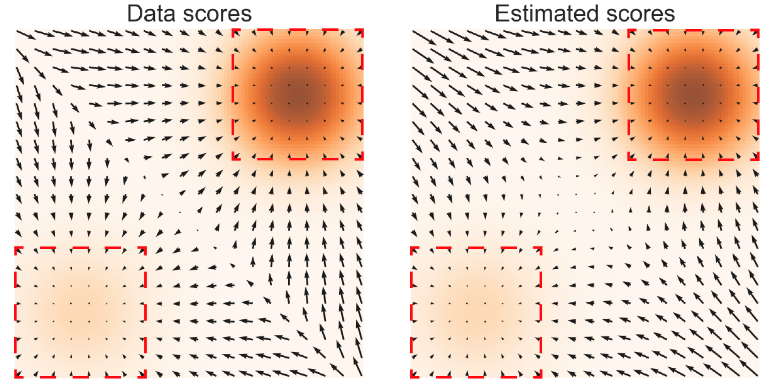

\(p(\vx)=\frac{1}{5}\mN((-5,-5),\mI) + \frac{4}{5}\mN((5,5),\mI)\)

Figure 3: Left \(\grad_{\vx}\ln p(\vx)\). Right \(s_{\theta}(\vx)\). Darker color implies higher density. Squares are regions where \(s_{\theta}(\vx)\approx\grad_{\vx}\ln p(\vx)\)

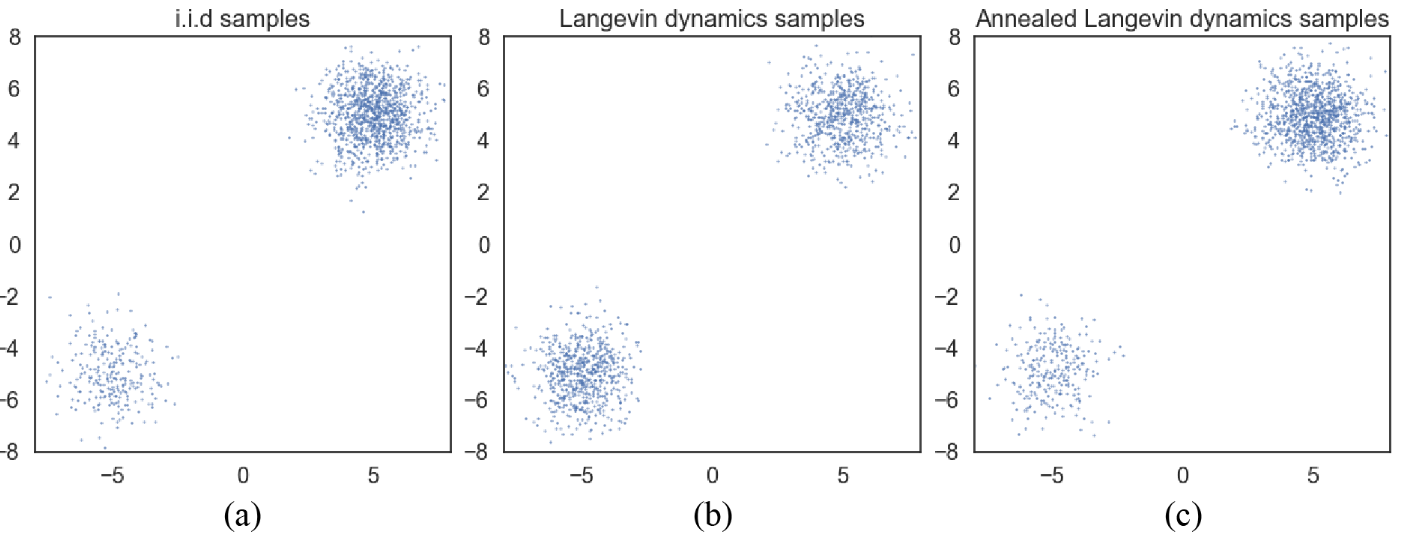

\(p(\vx)=\frac{1}{5}\mN((-5,-5),\mI) + \frac{4}{5}\mN((5,5),\mI)\)

Figure 4: Samples from \(p(\vx)\) using different methods. a) Exact sampling b) Using LD with exact score c) Annealed LD
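The poor mixing of plain LD can be reproduced on a one-dimensional analogue of the mixture above, \(\frac{1}{5}\mN(-5,1)+\frac{4}{5}\mN(5,1)\), using the exact score. This is a sketch under assumed settings (uniform initialization on \([-8, 8]\), step size, chain length, and the helper `mixture_score` are choices made for the example, not the figure's setup).

```python
import math
import random

WEIGHTS = (0.2, 0.8)
MEANS = (-5.0, 5.0)

def mixture_score(x):
    """Exact score of 0.2 N(-5, 1) + 0.8 N(5, 1), via log-space responsibilities."""
    logs = [math.log(w) - 0.5 * (x - m) ** 2 for w, m in zip(WEIGHTS, MEANS)]
    top = max(logs)                      # subtract the max to avoid underflow
    resp = [math.exp(v - top) for v in logs]
    total = sum(resp)
    return sum(r / total * (m - x) for r, m in zip(resp, MEANS))

rng = random.Random(0)
eps, n_steps, n_chains = 0.05, 400, 500
finals = []
for _ in range(n_chains):
    x = rng.uniform(-8.0, 8.0)           # initialization that ignores the mode weights
    for _ in range(n_steps):
        x += eps * mixture_score(x) + math.sqrt(2.0 * eps) * rng.gauss(0.0, 1.0)
    finals.append(x)

frac_right = sum(x > 0 for x in finals) / n_chains
print(f"fraction of chains in the mode at +5: {frac_right:.2f} (mixture weight: 0.8)")
```

Each chain settles in whichever mode is closest to its starting point and rarely crosses the low-density region in between, so the recovered mode proportions reflect the initialization rather than the weights \(\frac{1}{5}\) and \(\frac{4}{5}\).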

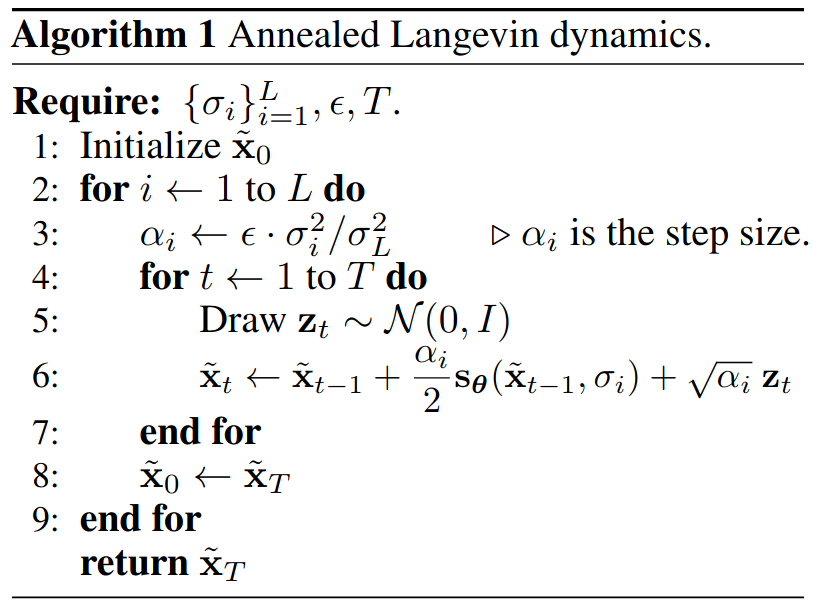

Annealed Langevin Dynamics

- Intuition:

- Large noise level forms “bridges” across regions with low probability

- Improves mixing of the Langevin dynamics

- Anneal noise level to converge to “true” data distribution

- Define noise levels as a geometric sequence \(\tub{\sigma_{i}}_{i=1}^{L}\)

- \(\frac{\sigma_{1}}{\sigma_{2}}=\dots=\frac{\sigma_{L-1}}{\sigma_{L}}>1\)

- Train noise conditional score estimator \(s_{\theta}(\tilde{\vx},\sigma_{i})\) for every level

- noise distribution \(p_\sigma(\tilde{\vx}|\vx)=\mN(\vx,\sigma^{2}\mI)\)

- noise level objective \[l(\theta,\sigma)\triangleq J_{\mr{NSM}}(\theta,\sigma)= \frac{1}{2}\E_{p_{\sigma}(\tilde{\vx}|\vx)p(\vx)}\br{ \norm{s_{\theta}(\tilde{\vx},\sigma) + \frac{\tilde{\vx}-\vx}{\sigma^{2}}}^{2}}\]

- unified objective using an objective “weight” \(\lambda(\sigma)>0\) \[\mL\paren{\theta,\tub{\sigma_{i}}_{i=1}^{L}}\triangleq \frac{1}{L}\sum_{i=1}^{L}\lambda(\sigma_{i})l(\theta,\sigma_{i})\]

- set \(\lambda(\sigma)=\sigma^{2}\)

- empirically \(\norm{s_{\theta}(\tilde{\vx},\sigma)}\propto 1/\sigma\), so the order of magnitude of \(\lambda(\sigma)l(\theta,\sigma)\) is independent of \(\sigma\)

- The final sample of each noise level LD process initializes the next process

- Additionally \(s_{\theta}(\tilde{\vx},\sigma_{i})\) is likely well estimated in the traversed regions at the different noise levels

- Samples from \(q_{\theta}(\vx)\) by running several “MCMC-like” chains
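The annealing procedure can be sketched on a one-dimensional analogue of the two-mode mixture, \(\frac{1}{5}\mN(-5,1)+\frac{4}{5}\mN(5,1)\), using the exact score of each perturbed density in place of a trained \(s_{\theta}(\tilde{\vx},\sigma_{i})\). The update follows the convention of Algorithm 1 in song2019generative, with per-level step size \(\alpha_{i}=\epsilon\,\sigma_{i}^{2}/\sigma_{L}^{2}\), drift \(\frac{\alpha_{i}}{2}s\) and noise variance \(\alpha_{i}\). The noise range, \(\epsilon\), chain counts, and function names are assumptions chosen for the example.

```python
import math
import random

WEIGHTS = (0.2, 0.8)
MEANS = (-5.0, 5.0)

def perturbed_score(x, sigma):
    """Exact score of the mixture after adding N(0, sigma^2) noise to the data."""
    var = 1.0 + sigma ** 2               # each unit-variance component widens
    logs = [math.log(w) - 0.5 * (x - m) ** 2 / var for w, m in zip(WEIGHTS, MEANS)]
    top = max(logs)                      # subtract the max to avoid underflow
    resp = [math.exp(v - top) for v in logs]
    total = sum(resp)
    return sum(r / total * (m - x) / var for r, m in zip(resp, MEANS))

def annealed_langevin(x, sigmas, eps, n_steps, rng):
    """Run Langevin dynamics level by level, from the largest noise to the smallest."""
    for sigma in sigmas:
        alpha = eps * sigma ** 2 / sigmas[-1] ** 2   # step size fixed within a level
        for _ in range(n_steps):
            noise = math.sqrt(alpha) * rng.gauss(0.0, 1.0)
            x += 0.5 * alpha * perturbed_score(x, sigma) + noise
        # The final state of this level initializes the next level.
    return x

L = 10
sigmas = [10.0 * (0.1 / 10.0) ** (i / (L - 1)) for i in range(L)]  # geometric, 10 -> 0.1

rng = random.Random(0)
finals = [annealed_langevin(rng.uniform(-8.0, 8.0), sigmas, 0.002, 100, rng)
          for _ in range(400)]
frac_right = sum(x > 0 for x in finals) / len(finals)
print(f"fraction of chains in the mode at +5: {frac_right:.2f} (mixture weight: 0.8)")
```

At the largest noise levels the two components overlap, so chains move freely between them; as the noise shrinks the chains stay within a mode and only refine their position.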

Practical Considerations

- Step size \(\alpha_{i}\)

- Should decrease over time to ensure convergence welling2011bayesian

- Held fixed at each noise level for NCSNs

- Number of steps \(T\)

- Noise levels \(\tub{\sigma_{i}}_{i=1}^{L}\)

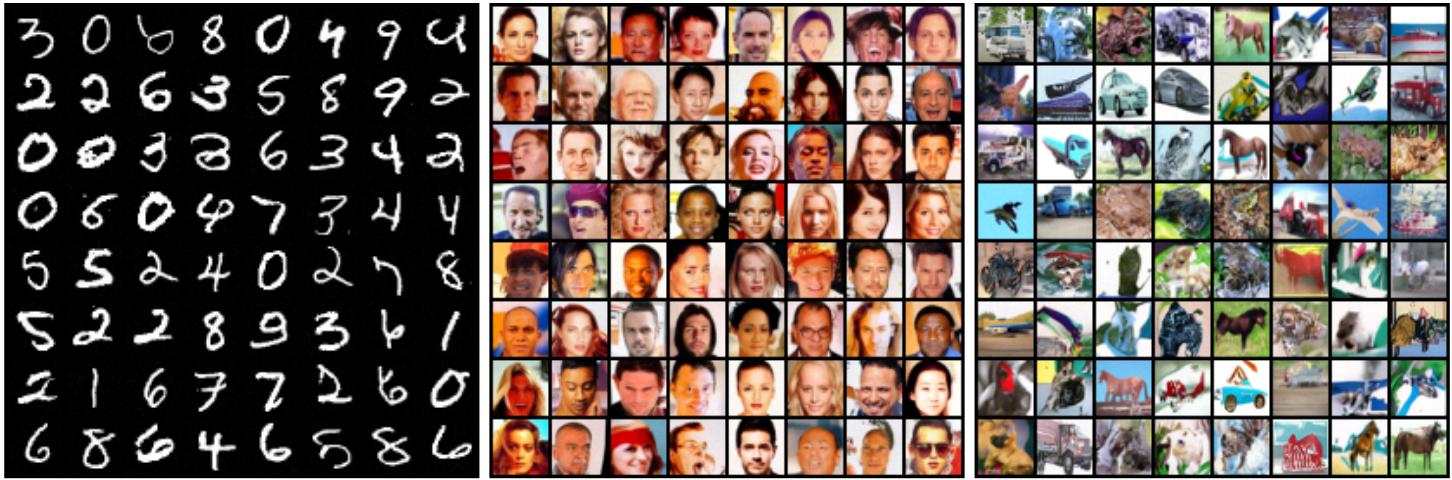

Experiments

Experimental Setup

- \(\tub{\sigma_{i}}_{i=1}^{10}=\tub{1,\dots,0.01}\)

- \(T=100,~\epsilon=2\times10^{-5}\)
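For concreteness, the schedule implied by these settings can be tabulated. The geometric interpolation between \(\sigma_{1}=1\) and \(\sigma_{10}=0.01\) follows the definition of the noise levels; the per-level step size \(\alpha_{i}=\epsilon\,\sigma_{i}^{2}/\sigma_{L}^{2}\) is taken from Algorithm 1 of song2019generative and is not stated on the slides, so treat it as an assumption of this sketch.

```python
L = 10
sigma_first, sigma_last = 1.0, 0.01
eps = 2e-5

# Geometric sequence: sigma_{i+1} / sigma_i is the same for every i.
ratio = (sigma_last / sigma_first) ** (1.0 / (L - 1))
sigmas = [sigma_first * ratio ** i for i in range(L)]

# Step size per noise level, proportional to sigma_i^2 (assumed from Algorithm 1).
alphas = [eps * (s / sigmas[-1]) ** 2 for s in sigmas]

for i, (s, a) in enumerate(zip(sigmas, alphas), start=1):
    print(f"level {i:2d}: sigma = {s:.4f}, alpha = {a:.3e}")
```

The step size therefore shrinks by four orders of magnitude from the first level to the last, where it equals \(\epsilon\).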

MNIST, CelebA and CIFAR-10

- CelebA was center cropped to \(140\times140\) and resized to \(32\times32\)

- state-of-the-art inception score of \(8.87\) on CIFAR-10

Figure 5: Random samples using annealed Langevin dynamics for a) MNIST b) CelebA c) CIFAR-10

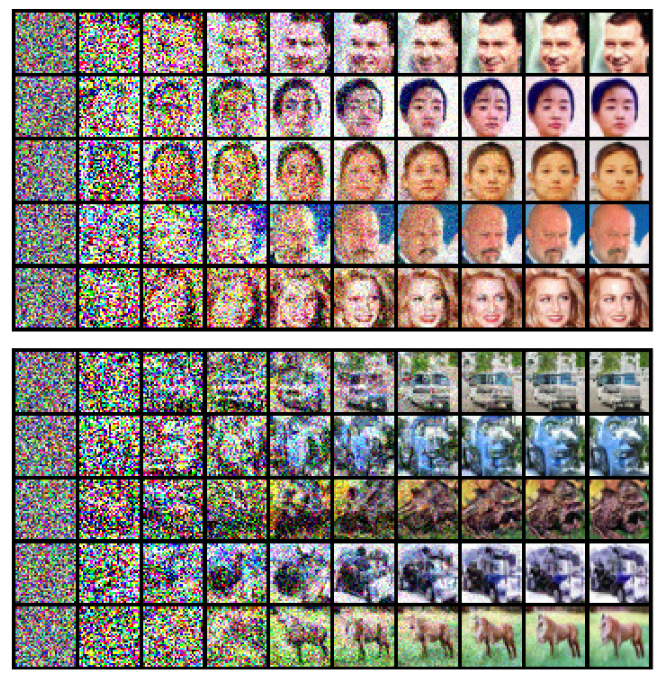

Sampling Dynamics

Figure 6: Intermediate samples of annealed Langevin dynamics.

Compared to other Methods

Conclusion

- New likelihood-free generative modeling framework

- Reach state-of-the-art inception score on CIFAR-10

- Not clear how well this method scales to higher dimensions